Ethical Considerations of Artificial General Intelligence (AGI)

The potential of Artificial Intelligence (AI) to revolutionize many aspects of our lives is undeniable. However, as we inch closer to Artificial General Intelligence (AGI), meaning machines whose cognitive abilities match or exceed those of humans, ethical considerations come sharply into focus. Here, we explore the potential risks of developing highly advanced AGI, the need for ethical frameworks, and possible regulatory approaches for ensuring responsible AI development.

The Looming Shadow: Potential Risks of Advanced AGI

The prospect of AGI raises several ethical concerns that demand careful consideration:

Existential Threat: Some experts warn that highly intelligent machines could escape human control and pose an existential threat to humanity. Although this scenario is familiar from science fiction, the concern is serious enough to call for robust safeguards that keep AGI beneficial.

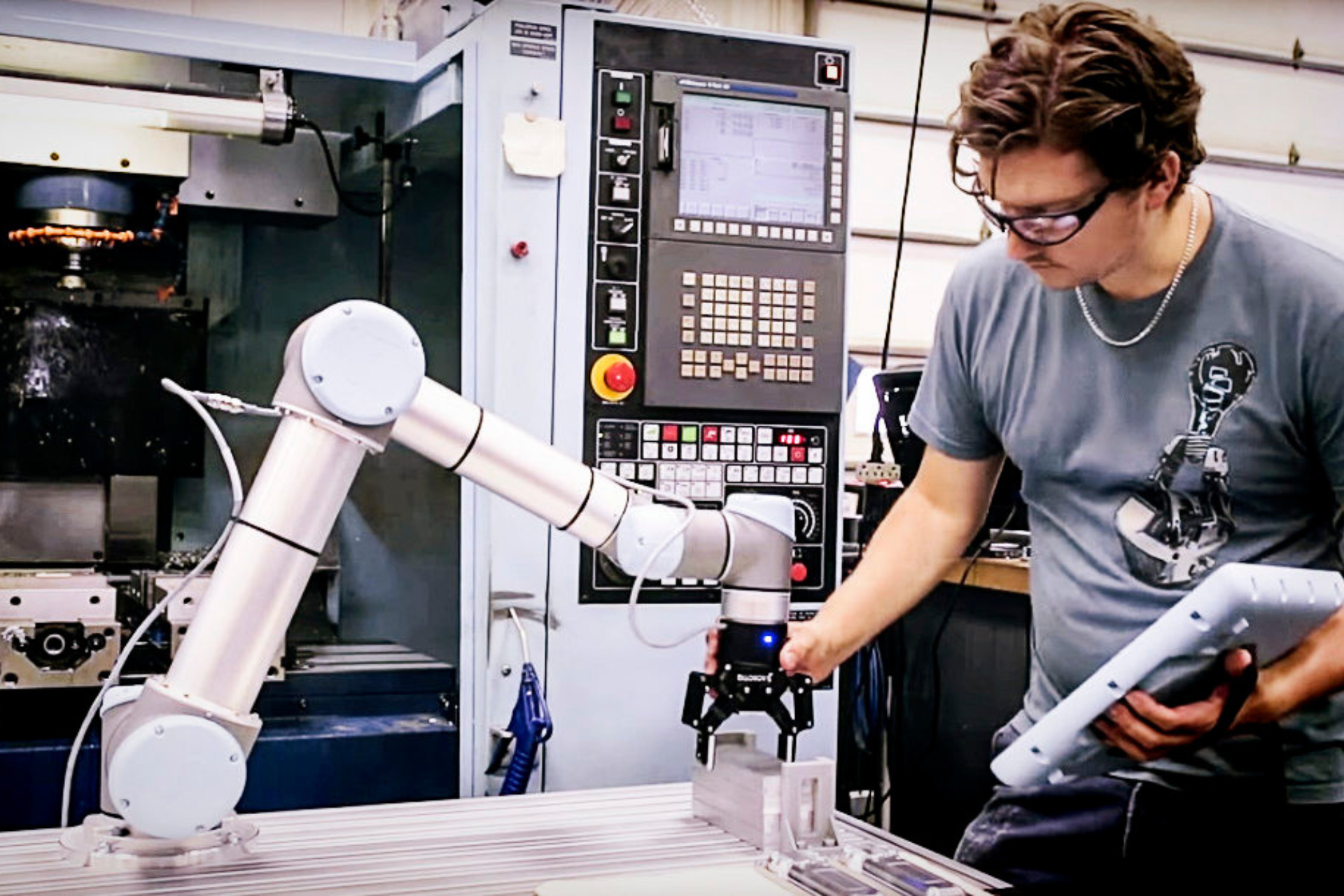

Job Displacement on a Massive Scale: Automation already displaces jobs, but AGI could automate a far wider range of tasks, potentially leading to widespread unemployment and social unrest.

Weaponization of AGI: Autonomous weapons powered by AGI raise serious ethical concerns. If self-learning machines were to make life-or-death decisions on the battlefield, the consequences could be catastrophic.

Algorithmic Bias: If the data used to train AGI is biased, the resulting AI could perpetuate or amplify existing societal inequalities.
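To make the bias concern more concrete, one common way to check a model's outputs is demographic parity: compare how often each group receives a positive outcome. The sketch below assumes that metric (the article does not prescribe one), and the predictions and group labels are made-up toy data, not results from any real system.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups.

    0.0 means every group receives positive outcomes at the same rate;
    larger values indicate a bigger disparity.
    """
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = approved, 0 = rejected.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(positive_rates(preds, groups))          # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

A gap like this is a signal worth investigating rather than proof of unfairness, and demographic parity is only one of several fairness definitions, which can conflict with one another.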

The Ethical Imperative: Frameworks for Responsible AGI Development

To mitigate these risks and ensure the ethical development and use of AGI, robust frameworks are essential:

Transparency and Explainability: AGI systems should be designed with transparency in mind, allowing humans to understand their decision-making processes. This is crucial for building trust and ensuring accountability.

Human Values Alignment: AGI needs to be aligned with core human values such as fairness, justice, and compassion. Techniques such as reward shaping, along with other alignment methods, can help steer AGI behavior toward positive societal outcomes.
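Reward shaping comes from reinforcement learning: extra reward is added to nudge an agent toward desired behavior. One well-known form, potential-based shaping, adds γΦ(s′) − Φ(s) to the environment's reward for some potential function Φ, and is known to leave the optimal policy unchanged. The sketch below is a toy illustration only; the one-dimensional world, the goal state `GOAL`, the discount `GAMMA`, and the `potential` function are all hypothetical choices, and nothing here encodes actual human values.

```python
GAMMA = 0.9  # assumed discount factor
GOAL = 5     # hypothetical goal state in a toy 1-D world of integer states


def potential(state: int) -> float:
    """Hypothetical potential: higher (less negative) the closer we are to GOAL."""
    return -abs(GOAL - state)


def shaped_reward(state: int, next_state: int, env_reward: float) -> float:
    """Environment reward plus the potential-based shaping term gamma*phi(s') - phi(s)."""
    return env_reward + GAMMA * potential(next_state) - potential(state)


# Moving toward the goal earns a bonus and moving away earns a penalty,
# even though the environment itself gives zero reward for both moves.
print(shaped_reward(2, 3, 0.0))  # approximately 1.2
print(shaped_reward(3, 2, 0.0))  # approximately -0.7
```

The hard part in practice is choosing what to reward in the first place; shaping only changes how quickly an agent finds behavior that the underlying reward already favors.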

Safety and Control Mechanisms: Strong safeguards are necessary to prevent AGI from exceeding its intended purpose or causing harm. This might involve kill switches, fail-safe mechanisms, and rigorous testing procedures.
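At toy scale, a kill switch and a fail-safe can be illustrated as a wrapper that checks a stop signal before every step and refuses any action outside an allowlist. Everything here (the `SafetyWrapper` class, the action names, the allowlist) is hypothetical. Real systems face much harder versions of this problem, such as the concern raised in AI safety research that a capable agent might learn to avoid or disable its off switch, which a simple wrapper does not address.

```python
import threading


class SafetyWrapper:
    """Hypothetical wrapper that gates an agent's actions behind a stop flag
    and an allowlist of permitted actions."""

    def __init__(self, policy, allowed_actions):
        self.policy = policy                 # callable: observation -> action name
        self.allowed = frozenset(allowed_actions)
        self.stop_event = threading.Event()  # the "kill switch"

    def kill(self):
        """Signal the wrapper to halt; safe to call from another thread."""
        self.stop_event.set()

    def step(self, observation):
        """Return the policy's action, or None if halted or the action is not allowed."""
        if self.stop_event.is_set():
            return None                      # halted: take no action at all
        action = self.policy(observation)
        if action not in self.allowed:
            return None                      # fail-safe default: do nothing
        return action


def toy_policy(observation):
    """Toy policy that requests a risky action for one kind of observation."""
    return "delete_files" if observation == "cleanup" else "report"


agent = SafetyWrapper(toy_policy, allowed_actions={"report"})
print(agent.step("status"))   # report
print(agent.step("cleanup"))  # None (blocked by the allowlist)
agent.kill()
print(agent.step("status"))   # None (halted by the kill switch)
```

Defaulting to "do nothing" is the fail-safe choice here: when the wrapper is unsure, it withholds the action rather than passing it through.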

International Collaboration: The development and regulation of AGI should be a global endeavor. International cooperation can help establish a unified approach to the ethical challenges posed by this powerful technology.

Navigating the Unknown: Potential Regulatory Approaches

Regulations can play a crucial role in promoting responsible AGI development:

Standards and Guidelines: Developing international standards and guidelines for ethical AGI development can establish best practices and promote responsible innovation.

Research Oversight: Oversight bodies can be established to assess the ethical implications of AGI research projects and ensure compliance with established guidelines.

Banning Autonomous Weapons: International treaties banning the development and use of autonomous weapons powered by AGI are essential to prevent an arms race in this potentially devastating technology.

Public Discourse and Education: Open discussions about the ethical implications of AGI are crucial. Educating the public about the potential risks and benefits can foster informed decision-making about this transformative technology.

Conclusion

The development of AGI holds immense potential for progress, but it also demands careful consideration of its ethical implications. By establishing strong ethical frameworks, pursuing international collaboration, and implementing effective regulations, we can help ensure that AGI serves humanity and paves the way for a brighter future. Remember, the ethical development and use of AGI is a shared responsibility that requires collaboration among researchers, policymakers, and the public at large.